Artificial Intelligence has become a de facto standard. You would be hard-pressed to find an application where AI plays no role: data organization, cybersecurity, speculation, financial prediction, knowledge extraction… The list is endless.

But remember: just as AI can be leveraged for good, it can also be put to harmful use. Some common ways hackers can use AI are:

- To make attacks more intelligent

- To figure out better ways of exploitation

- To decide what, when, how, or who to attack

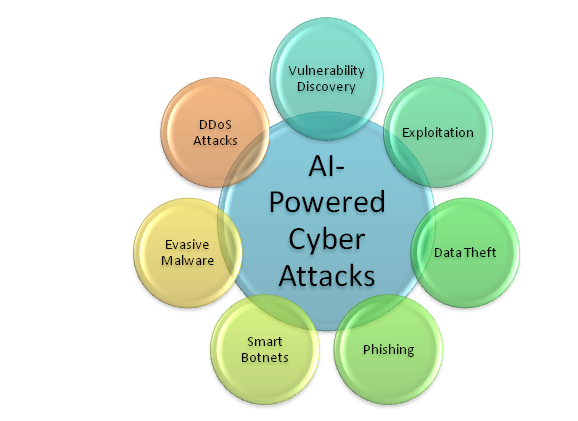

7 Scenarios Where Attackers Can Use AI to Breach a System

Scenario 1: Vulnerability Discovery

This is the stage where attackers look for weaknesses in a system that they can use to break into it. Typically, there are two ways to find them:

- Use known payloads to check for known issues: This approach is straightforward, much like working through a checklist. Attackers test each known issue with its corresponding payload, one by one.

- Generate new payloads to find new issues: This is an entirely different approach. Attackers first have to provoke unusual behavior and then use it to discover a working payload. How? They put unusual data into request fields and wait for the target service to return an abnormal response. This is where Artificial Intelligence comes in: it analyzes payloads already disclosed for known vulnerabilities and proposes new payloads that are more likely to uncover new issues.
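To make the "try unusual input, keep whatever produces an abnormal response" loop concrete, here is a minimal sketch of feedback-driven mutation fuzzing against a local toy parser. It is a simplified stand-in, not an AI model: `toy_parser`, `mutate`, and `fuzz` are hypothetical names, and random character edits take the place of the learned payload generator described above. The core idea carries over, though: an input that triggers a previously unseen response is kept and mutated further.

```python
import random


def toy_parser(data: str) -> str:
    """Hypothetical stand-in for a target service; returns a response category."""
    if not data:
        return "empty"
    if data.startswith("{") and not data.endswith("}"):
        return "error:unterminated"
    if "'" in data:
        return "error:quote"
    if len(data) > 64:
        return "error:too_long"
    return "ok"


def mutate(seed: str, rng: random.Random) -> str:
    """Apply one random edit: insert a printable character, delete one, or duplicate."""
    choice = rng.randrange(3)
    pos = rng.randrange(len(seed) + 1)
    if choice == 0:  # insert a random printable ASCII character
        return seed[:pos] + chr(rng.randrange(32, 127)) + seed[pos:]
    if choice == 1 and seed:  # delete one character
        pos = min(pos, len(seed) - 1)
        return seed[:pos] + seed[pos + 1:]
    return seed + seed  # duplicate the whole input


def fuzz(seeds, iterations=2000, seed=0):
    """Keep inputs that produce new responses; return {response: first input seen}."""
    rng = random.Random(seed)
    corpus = list(seeds)
    seen = {toy_parser(s) for s in corpus}
    findings = {}
    for _ in range(iterations):
        candidate = mutate(rng.choice(corpus), rng)
        response = toy_parser(candidate)
        if response not in seen:  # abnormal/new behavior: keep it for further mutation
            seen.add(response)
            corpus.append(candidate)
            findings[response] = candidate
    return findings


print(fuzz(["{a}"]))
```

Note that `error:too_long` is never reached from this seed: every kept input stays only a few characters long, so no single edit can push it past 64 characters. Blind random edits miss such deeper behaviors, which is exactly the gap a smarter, learned input generator aims to close.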

Scenario 2: Exploitation

At this stage, three elements play a vital role in gaining access or causing damage:

- Experience

- Knowledge

- Previously disclosed vulnerabilities

For known issues, attackers automate this process by scripting each exploit step by step.

But what if the vulnerability is new? This is where Artificial Intelligence comes into the picture. It helps attackers work out the right approach to building an exploit for a particular application, system, environment, or infrastructure. (The added benefit: a machine works much faster than a human, so it can adapt an exploit quickly.)

Scenario 3: Data Theft

This is where attackers hit pay dirt. They find critical user information that has been publicly exposed (such as credit card details, SSNs, email addresses, and passwords) and use it for their own gain.

Even then, stealing the data can be challenging because of the outbound filters installed in the victim’s infrastructure. Since AI excels at search, attackers can use it to search through and classify the data, singling out what is most valuable.
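The "search and classify" step does not have to be exotic to be effective. As a minimal, rule-based sketch (pattern matching plus a checksum, not machine learning), the snippet below scans text for candidate payment-card numbers and keeps only those that pass the Luhn check, a common approach in data-loss-prevention tooling that defenders can also run against their own data. The pattern, the `find_card_numbers` helper, and the sample text are hypothetical; an ML classifier would replace the hand-written rule with a learned one.

```python
import re

# Hypothetical pattern: 13-19 digits, optionally separated by single spaces or hyphens.
CANDIDATE = re.compile(r"\b\d(?:[ -]?\d){12,18}\b")


def luhn_valid(digits: str) -> bool:
    """Return True if the digit string passes the Luhn checksum."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit, counting from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def find_card_numbers(text: str) -> list[str]:
    """Return candidate card numbers found in text that pass the Luhn check."""
    hits = []
    for match in CANDIDATE.finditer(text):
        digits = re.sub(r"[ -]", "", match.group())
        if luhn_valid(digits):
            hits.append(digits)
    return hits


sample = "order ref 1234 5678 9012 3456, card 4111 1111 1111 1111 exp 12/27"
print(find_card_numbers(sample))  # ['4111111111111111']
```

The checksum is what turns a noisy search into a classification: the order reference has the right shape but fails the Luhn check, so only the well-known test card number survives.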

Scenario 4: Phishing

Some of the most common applications of machine learning are text-to-speech, natural language processing (NLP), and speech recognition. Using recurrent neural networks, a model can learn to imitate writing styles, which makes for smarter social engineering.

But that is just one side of the coin. On the other side, attackers can use machine learning to craft advanced spear-phishing emails: they train a model on genuine emails and then use it to write convincing messages aimed at specific targets. McAfee Labs’ predictions for 2017 anticipated exactly this.

Scenario 5: Smart Botnets

According to research, 2018 was set to be the year of self-learning ‘swarm-bots’ and ‘hivenets’. In other words, compromised IoT devices could be trained to make attacks scale.

How?

Simple: the devices can talk to each other and make decisions based on shared local intelligence.

The same research also predicts that zombie devices will become smarter and able to act on commands without intervention from the botnet herder. As a result, hivenets could grow exponentially as swarms.

This would expand attackers’ ability to hit several victims simultaneously and drastically hamper response and mitigation.

Scenario 6: Evasive Malware

So far, cybercriminals have largely created malware by hand. They write scripts to build Trojans and viruses, and use tools such as password scrapers and rootkits to distribute and execute them.

But the day is not far off when these intruders will use machine learning to create malware. The classic example is Endgame: at DEF CON 2017, the security company showed how it created custom malware using Elon Musk’s OpenAI framework to test whether security engines could detect it.

The security engine failed to detect it. The malware evaded the engines by changing just a few parts of its code, enough to make it look trustworthy to antivirus software.

Another example is the paper ‘Generating Adversarial Malware Examples for Black-Box Attacks Based on GAN’. Both show that machine learning can modify malicious code on the fly.

Scenario 7: DDoS Attacks

Remember the Mirai botnet? It showed clearly how IoT devices magnify the devastating blow of a DDoS attack. Now, with advances in Artificial Intelligence, AI is set to play an active role in mounting such attacks.

Why and How?

Have a look:

Launching a DDoS attack is easy. Anyone can download a free tool and potentially take your company offline.

If you are a small company with a generic website and no added protection, it is even easier, because you are especially susceptible. On the one hand, these simple tools can generate massive traffic volumes; on the other, they can lead to the creation of botnets like Mirai. A large campaign using many compromised IoT devices can scale up to around 1 Tbps (terabit per second) of traffic to flood any service or system.
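The aggregate numbers add up quickly. The sketch below works through the arithmetic under illustrative assumptions: 100,000 compromised devices at 10 Mbps of upstream bandwidth each. These figures and the helper names are hypothetical, not Mirai measurements. It also shows why attack size is quoted as a rate rather than a volume: 1 Tbps sustained for one minute moves 7.5 terabytes.

```python
def aggregate_tbps(devices: int, mbps_per_device: float) -> float:
    """Total attack bandwidth in Tbps (SI units: 1 Tbps = 1,000,000 Mbps)."""
    return devices * mbps_per_device / 1_000_000


def volume_terabytes(tbps: float, seconds: float) -> float:
    """Data moved at a given rate over a given time, in terabytes (8 bits per byte)."""
    return tbps * seconds / 8


# Illustrative assumptions, not measured botnet figures.
rate = aggregate_tbps(devices=100_000, mbps_per_device=10)
print(f"{rate} Tbps")                                  # 1.0 Tbps
print(f"{volume_terabytes(rate, 60)} TB per minute")   # 7.5 TB per minute
```

Because the total scales linearly with the device count, doubling the number of compromised devices doubles the attack rate, which is why large IoT botnets are so dangerous.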

Final Thoughts

Attackers do not use Artificial Intelligence (AI) only to find new ways of identifying vulnerabilities. They also use it to work out which data matters most in a breach. And soon, AI may be used to devise new ways of exploiting these issues, rather than merely speeding up attacks that humans have designed.